Historical Perspectives

The Birth of Computing Across Cultures

In the sprawling narrative of computing, the early chapters are not written by a single inventor, culture, or country. Instead, they are a mosaic of contributions from diverse civilizations, each adding a unique piece to the puzzle. This section explores these early developments in computing from a global perspective, highlighting the often-overlooked contributions from various cultures.

Ancient Algorithms and Number Systems

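- Mesopotamian Origins: Our journey begins in ancient Mesopotamia, home to some of the earliest known written algorithms. Drawing on a sophisticated understanding of mathematics and astronomy, Mesopotamian scribes recorded step-by-step procedures for calculations such as reciprocals and areas, using a base-60 (sexagesimal) place-value number system. In doing so, they laid the groundwork for computational thinking.[1]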

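- Indian Innovations: Moving to India, we find an early precursor of the binary number system that underlies modern computing. The scholar Pingala, perhaps as early as the 2nd century BC, described a method for listing poetic meters as patterns of short and long syllables in the Chandas Shastra, an ancient Sanskrit text on prosody. Because each syllable takes one of only two values, his scheme is equivalent to writing numbers in binary.[2]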

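- Chinese Contributions: In China, the abacus marked a significant step in the development of computational tools. Its exact origins are debated, and the date of around 500 BC sometimes given for its invention is uncertain. By sliding beads along rods, a trained user could carry out addition, subtraction, multiplication, and division quickly and reliably, an early example of human ingenuity in building computational aids.[3]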

The Middle Eastern Influence

- The Islamic Golden Age: During the Islamic Golden Age, scholars such as Muhammad ibn Musa Al-Khwarizmi made significant advances in mathematics and science. The term “algorithm” derives from the Latinized form of his name, and “algebra” comes from al-jabr in the title of his treatise on the subject. That treatise was especially influential because it set out systematic methods for solving linear and quadratic equations, a cornerstone in the evolution of computational logic.[4]

European Advancements

- Seventeenth-Century Europe: Fast forward to seventeenth-century Europe, where figures like Blaise Pascal and Gottfried Wilhelm Leibniz made strides in mechanical computing. Pascal’s Pascaline (1642), an early mechanical calculator, and Leibniz’s stepped reckoner, which introduced the stepped-drum mechanism later used in many mechanical calculators, laid foundational stones for the future of mechanical computing devices.[5]

The African and Pre-Columbian Contributions

- Africa’s Rich Mathematical Heritage: Although often underrepresented in the history of computing, African cultures have a rich heritage of mathematical concepts. From counting systems and strategy games such as mancala to intricate geometric patterns in art and sophisticated architectural designs, these cultures demonstrate a deep understanding and application of mathematical principles.[6]

- Pre-Columbian American Innovations: Pre-Columbian civilizations in the Americas, such as the Maya, developed complex calendrical systems and hieroglyphic writing. The Maya also used a base-20 place-value number system that included a symbol for zero, evidence of advanced mathematical and astronomical understanding.[7]

The Mosaic Comes Together

As we move through these diverse cultural landscapes, it becomes evident that the birth of computing was a global phenomenon enriched by a multitude of cultures and civilizations. Each contributed to shaping the fundamental concepts and tools that would, centuries later, culminate in the development of modern computing. Understanding this rich, multicultural computing heritage broadens our historical perspective and instills a deeper appreciation for the diverse contributions that have shaped the technologies we use today.

In this global narrative, computing emerges not only as a technical discipline but also as a field deeply rooted in human culture and intellectual history. This understanding is crucial for students as they navigate a world where technology continues to evolve rapidly, both influenced by and influencing many cultures.

Evolution of Computing

The Post-War Western Boom and Its Global Ripple Effect

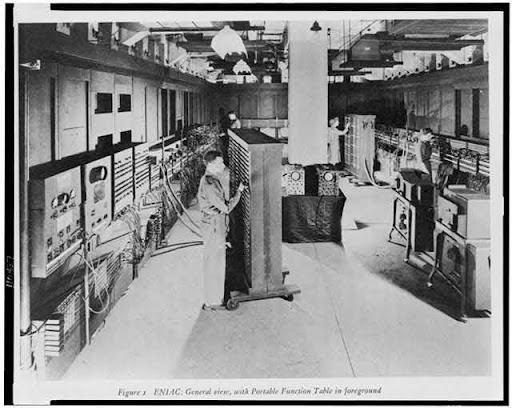

During and immediately after World War II, the United States emerged as a crucible for modern computing, driven by military needs and academic curiosity. This era produced machines like the ENIAC (Electronic Numerical Integrator and Computer), one of the first general-purpose electronic digital computers, designed primarily to calculate artillery firing tables. Across the Atlantic, the United Kingdom made equally important contributions. Colossus, built during the war, helped break German wartime codes at Bletchley Park, while EDSAC (Electronic Delay Storage Automatic Calculator), completed at the University of Cambridge in 1949, was one of the first practical stored-program computers. These early machines set the stage for the digital age, embodying the shift towards automation and complex computation. They established architectures and principles that defined computing for decades, influencing global technological trends and setting a template for future innovations.

The Asian Leap: Japan’s Electronic Renaissance and India’s Software Surge

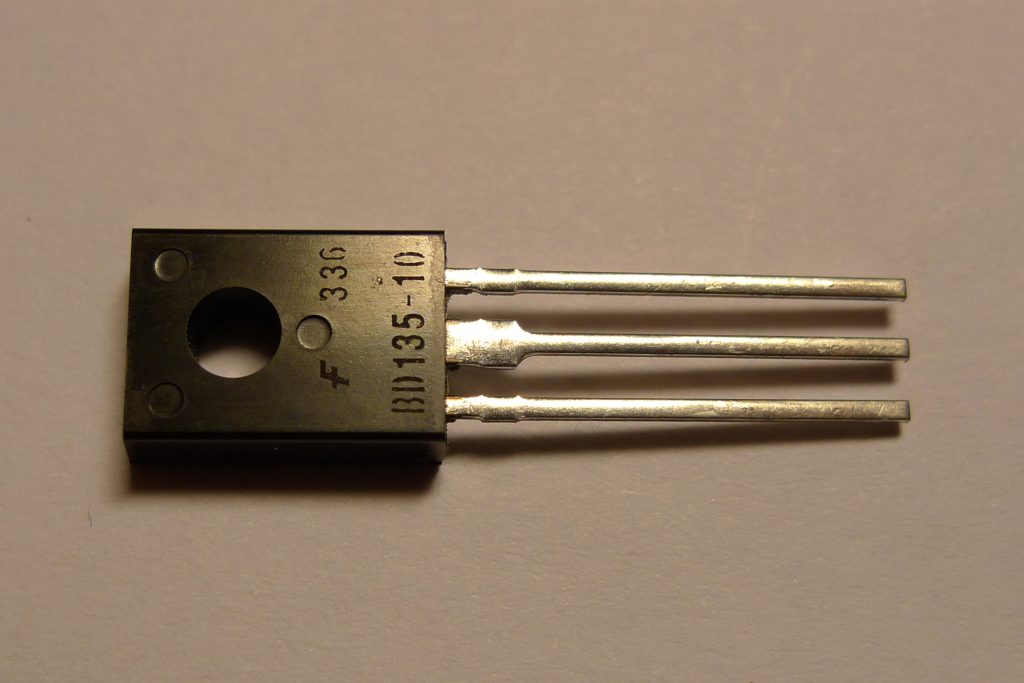

Japan’s post-war journey is a remarkable tale of resilience and technological ambition. Embracing technology as a means of economic revival, Japanese companies like Sony and Toshiba were quick to commercialize the transistor, which had been invented at Bell Labs in the United States in 1947, and applied it to consumer products such as portable radios. This work helped shrink electronic devices and paved the way for the compact, powerful gadgets we use today. Meanwhile, India, drawing on a large English-speaking workforce and strong mathematical and engineering education, carved out a niche in the software domain. By the late 20th century, aided by state policy and economic liberalization, India had established itself as a global powerhouse in software development and IT services, changing global perceptions of where software could be built. India’s rise as an IT hub demonstrated how combining skilled human resources with strategic positioning could propel a nation to the forefront of a technological sector.[8][9]

The Soviet Narrative: An Alternate Path in Computing

The Soviet Union’s journey in computing followed a distinct trajectory, molded by its political and ideological context. Seeking technological independence, Soviet scientists and engineers developed their own line of computers, such as the MESM and BESM series. These machines were critical to various Soviet space and military projects, demonstrating that different socio-political contexts could create unique technological ecosystems. The Soviet computing story is a testament to how innovation can flourish under varying conditions and constraints, and to how these alternate paths can contribute to the broader narrative of global technological progress.[10]

The Internet Era: A Cultural Melting Pot

The advent of the Internet in the late 20th century marked a seismic shift in computing, transforming it from a tool for specific scientific and business applications to a universal platform for global connectivity. This era democratized information access and created a new space for cultural exchange. The Internet became a melting pot where diverse cultures interacted, shared, and sometimes clashed, leading to new forms of communication, socialization, and expression. It also spurred the creation of new industries and transformed existing ones, reshaping the global economic landscape. The rise of social media platforms further amplified these effects, making the Internet a central fixture in modern cultural and social life.

Addressing the Digital Divide: The Quest for Equitable Access

While the spread of computing and internet technology brought numerous advancements, it also highlighted stark disparities. The digital divide, a term used to describe the gap between those with easy access to digital technology and those without, became a pressing issue. Efforts to address this divide, such as providing affordable computing devices to underprivileged communities and improving internet infrastructure in remote areas, underscored the role of technology as both a driver of inequality and a tool for reducing it. These efforts reflected a growing recognition of the need to make technology accessible and beneficial for all, irrespective of geographical or socio-economic barriers.[11]

The Future: Cultural Dimensions of Emerging Technologies

Looking ahead, the evolution of computing continues to be influenced by various cultural factors. Data privacy, ethical considerations in artificial intelligence, and sustainable technology are increasingly at the forefront. These concerns reflect a growing global consciousness about the broader implications of technology on society and culture. The future path of computing evolution will likely be marked by a greater emphasis on ethical, social, and cultural considerations, shaping technology to be more responsive to a global community’s diverse needs and values.

Media Attributions

- Abacus © Image by succo from Pixabay is licensed under a CC BY-SA (Attribution ShareAlike) license

- Mayan calendar © Pixabay is licensed under a CC BY-SA (Attribution ShareAlike) license

- Large-Scale Digital Calculating Machinery © Library of Congress is licensed under a Public Domain license

- BD135 Transistor © Wikipedia is licensed under a CC BY-SA (Attribution ShareAlike) license

- Phone and money © Pixabay is licensed under a CC BY-SA (Attribution ShareAlike) license

- Robson, E. (1999). Mesopotamian mathematics, 2100-1600 BC: Technical constants in bureaucracy and education (Oxford Editions of Cuneiform Texts). Oxford University Press.

- Kulkarni, A. (2007). Recursion and combinatorial mathematics in Chandashastra.

- Yamazaki, Y. (1959). The origin of the Chinese abacus. Memoirs of the Research Department of the Toyo Bunko (The Oriental Library), 18, 91-140.

- Rashed, R. (1994). Al-Khwarizmi: The beginnings of algebra. Saqi Books.

- Marguin, J. (1994). History of calculating instruments and machines, three centuries of thinking mechanics 1642-1942. Hermann.

- Zaslavsky, C. (1999). Africa counts: Number and pattern in African cultures. Lawrence Hill Books.

- Aveni, A. F. (1980). Skywatchers of ancient Mexico. University of Texas Press.

- Riordan, M., & Hoddeson, L. (1997). Crystal fire: The invention of the transistor and the birth of the information age. W. W. Norton & Company.

- Heeks, R. (1996). India’s software industry: State policy, liberalisation and industrial development. Third World Quarterly, 17(2), 275-298.

- Gerovitch, S. (2002). From newspeak to cyberspeak: A history of Soviet cybernetics. MIT Press.

- Norris, P. (2001). Digital divide: Civic engagement, information poverty, and the Internet worldwide. Cambridge University Press.