How Generative AI Works

Warm-up

Word Association

Choose a few of these to discuss as a group:

- The dog chased its [blank].

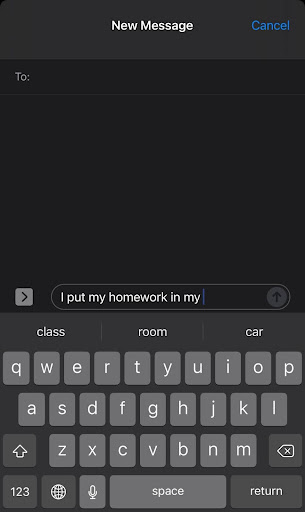

- I put my homework in my [blank].

- He hit the baseball with a [blank].

- She wore a beautiful red [blank].

- We watched the movie with a bucket of [blank].

- The teacher wrote on the [blank].

- During summer, I love to swim in the [blank].

- I read the entire book but didn’t understand the [blank].

- Every morning, she drinks a cup of [blank].

- He listened to his favorite song on the [blank].

- For my birthday, I got a new [blank].

- The astronaut looked out at the [blank].

- She likes to paint with water [blank].

- I play my favorite video game on the [blank].

- The athlete runs fast on the [blank].

- My favorite pizza is topped with [blank].

- The bear in the zoo loves to eat [blank].

- They cheered as their team scored a [blank].

This exercise also connects to cell phone predictive texting, which suggests the next word based on the words already typed.

Markov chains predict the next word in a sequence from how likely each candidate is to follow the words before it. Where do those likelihoods come from? They are based on the texts that the model was trained on. Spend some time exploring these sources to better understand the process and the training data:

- Jill Walker Rettberg – exploration of training data for GPT-3

- What are large language models and how do they work?

- How Generative AI Really Works

- What is ChatGPT Doing and Why Does It Work?
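
To make the Markov chain idea from the warm-up concrete, here is a minimal Python sketch. It is a toy illustration, not how any particular product is built: the training text is made up for this example, and the function names (`build_bigram_counts`, `next_word_probabilities`, `most_likely_next`) are hypothetical. It counts which word follows which in the training text, then turns those counts into likelihoods for the next word.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        counts[current][following] += 1
    return counts


def next_word_probabilities(counts, word):
    """Turn the raw follow-up counts for `word` into likelihoods."""
    followers = counts.get(word.lower())
    if not followers:
        return {}  # the word never appeared in the training text
    total = sum(followers.values())
    return {candidate: n / total for candidate, n in followers.items()}


def most_likely_next(counts, word):
    """Pick the single most likely next word, or None if unknown."""
    probabilities = next_word_probabilities(counts, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Made-up training text (an assumption for illustration only).
training_text = (
    "the dog chased its tail . "
    "the dog chased its ball . "
    "the dog chased its tail . "
    "the cat chased its tail ."
)

counts = build_bigram_counts(training_text)
print(next_word_probabilities(counts, "its"))
print(most_likely_next(counts, "its"))
```

In this tiny training text, "its" is followed by "tail" three times and "ball" once, so the model fills in "The dog chased its [blank]" with "tail". Change the training text and the prediction changes with it, which is the point to discuss: the model can only predict what its training data makes likely.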